Gaze following, in the sense of continuously measuring (with a greater or a lesser degree of anticipation) the head pose and gaze direction of an interlocutor so as to determine his/her focus of attention, is important in several important areas of computer vision applications, such as the development of non-intrusive gaze-tracking equipment for psychophysical experiments in Neuroscience, specialized telecommunication devices, Human-Computer Interfaces (HCI) and artificial cognitive systems for Human-Robot Interaction (HRI).

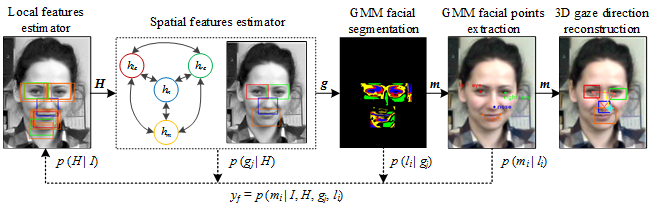

The gaze following image processing chain, depicted in Fig. 1, contains four main steps. We assume that the input is an 8-bit gray-scale image , of width

and height

, containing a face viewed either from a frontal or profile direction, where

.

represents the 2D coordinates of a specific pixel. The face region is obtained from a face detector.

Firstly, a set of facial features ROI hypotheses , consisting of possible instances of the left

and right

eyes, nose

and mouth

, are extracted using a local features estimator which determines the probability measure

of finding one of the searched local facial region. The number of computed ROI hypotheses is governed by a probability threshold

, which rejects hypotheses with a low

confidence measure. The choice of the

threshold is not a trivial task when considering time critical systems, such as the gaze estimator, which, for a successful HRI, has to deliver in real-time the 3D gaze orientation of the human subject. The lower

is, the higher the computation time. On the other hand, an increased value for

would reject possible "true positive" facial regions, thus leading to a failure in gaze estimation. In order to obtain a robust value for the hypotheses selection threshold, we have chosen to adapt

with respect to the confidences provided by the subsequent estimators from Fig. 1, which take as input the facial regions hypotheses. The output probabilities coming from these estimation techniques, that is, the spatial estimator and the GMM for point-wise feature extraction, are used in a feedback manner within the extremum seeking control paradigm.

Once the hypotheses vector has been build, the facial features are combined into the spatial hypotheses

, thus forming different facial regions combinations. Since one of the main objective of the presented algorithm is to identify facial points of frontal, as well as profile faces, a spatial vector

is composed either from four, or three, facial ROIs:

where .

The extraction of the best spatial features combination can be seen as a graph search problem , where

are the edges of the graph connecting the hypotheses in

. The considered features combinations are illustrated in Fig. 2. Each combination has a specific spatial probability value

given by a spatial estimator trained using the spatial distances between the facial features from a training database.

References

S.M. Grigorescu and F. Moldoveanu "Human-Robot Interaction through Robust Gaze Following", Memoirs of the Scientific Sections of the Romanian Academy, 2016 (to be published).

Latex Bibtex Citation

@inproceedings{grigorescu2016human,

author = {Grigorescu, Sorin M and Macesanu, Gigel},

title = {Human--Robot Interaction Through Robust Gaze Following},

booktitle = {Congress on Information Technology, Computational and Experimental Physics},

pages = {165--178},

year = {2016},

organization = {Springer},

}